Stanford CME295 Transformers & LLMs | Autumn 2025 | Lecture 4 - LLM Training

Key Summary

- This lecture explains how we train neural networks by minimizing a loss function with optimization methods. It starts with gradient descent and stochastic gradient descent (SGD), which update parameters by stepping opposite to the gradient. Mini-batches make training faster, and the noise they add can help the optimizer escape poor regions of the loss landscape, such as bad local minima.

- Momentum speeds up learning by accumulating a velocity that keeps moving in useful directions. If gradients point the same way across steps, momentum takes larger strides; if gradients flip, it slows down. This helps smooth zig-zag paths and speeds convergence on long, sloped valleys.

- RMSprop adapts the learning rate per parameter using a moving average of squared gradients. Parameters with big gradients get smaller steps; parameters with small gradients get larger steps. This balances learning across the network and improves stability.

- Adam combines momentum’s first-moment tracking with RMSprop’s second-moment tracking. It also applies bias correction to fix early-step underestimation. With defaults beta1=0.9, beta2=0.999, and epsilon=1e-8, Adam generally works well with minimal tuning.

- Learning rate decay schedules let training make fast progress early and then fine-tune carefully. Step decay reduces the learning rate by a fixed factor every set number of epochs. Exponential decay shrinks it steadily over time, while cosine annealing smoothly lowers it following a cosine curve.

- Regularization fights overfitting, which is when a model memorizes training data and fails to generalize. L2 (weight decay) penalizes large weights to keep the model simpler and more stable. L1 encourages sparsity by pushing many weights to be exactly zero.

- Dropout randomly turns off neurons during training with a set probability p. This prevents the network from relying too much on any single neuron and acts like training many smaller models as an ensemble. At test time, no neurons are dropped.

- Batch normalization normalizes each layer’s activations using mini-batch mean and variance. After normalizing, learnable scale (gamma) and shift (beta) are applied, with typical initial values gamma=1 and beta=0. This stabilizes training and often allows larger learning rates.

- Picking the right architecture matters: match network depth and width to problem complexity and data size. Too large a model without enough data can overfit; too small may underfit. Balance capacity with available data and task difficulty.

- Proper data preprocessing is essential. Normalize or scale input features to similar ranges and remove clear outliers. Neural nets are sensitive to input scales; mismatched scales can slow or break learning.

- Weight initialization influences early learning and stability. Random initialization (uniform or normal) breaks symmetry, while Xavier (Glorot) and He initializations scale the weights to keep activation variance steady across layers. Good initialization helps prevent dead neurons and vanishing or exploding signals.

- Choosing a good learning rate is critical. Too large and training diverges; too small and it takes forever. Combine a sensible base learning rate with a decay schedule for best results.

- Always monitor training and validation losses. If training loss falls but validation loss rises, that’s overfitting; add regularization or reduce capacity. Use a validation set that mirrors real-world data to tune hyperparameters.

- Visualizing weights and activations can catch issues early. Extremely large or tiny weights may suggest learning rate or regularization problems. Adjust these settings if you see unhealthy weight patterns.

- Mini-batches are the practical training unit that balances speed and gradient quality. They make GPU computation efficient and add helpful noise that aids exploration. Batch size also interacts with batch norm and learning rate behavior.

- Cosine annealing provides a gentle, smooth way to lower the learning rate, often improving final performance. The rate starts at its maximum, decreases slowly at first, fastest in the middle of the schedule, and then flattens out into very small steps near the end. This helps the model settle into a good minimum.

- Adam’s popularity comes from its robustness and low need for tuning. It adapts per-parameter steps and benefits from both momentum-like smoothing and RMS-like scaling. In many cases, it’s a strong default choice for training neural nets.

Why This Lecture Matters

Training neural networks well is central to almost every modern AI application, from image classification to language modeling. If you understand optimizers like Momentum, RMSprop, and Adam, you can make models learn faster, more stably, and with less trial-and-error. Learning rate schedules enable you to sprint early and fine-tune late, often achieving higher accuracy without extra data. Regularization methods such as L1, L2, and Dropout directly tackle overfitting, which is one of the biggest obstacles to real-world deployment; they help models perform reliably on new data. Batch normalization further stabilizes and speeds training, allowing you to use more aggressive learning rates and deeper architectures. This knowledge is useful for roles like machine learning engineer, data scientist, and research scientist, as well as software engineers adding ML features to products. It solves practical problems like unstable training runs, models that memorize but don’t generalize, and long tuning cycles with little progress. Applying these techniques can reduce compute costs, shorten development time, and improve system reliability. In your projects, start with Adam, pair it with a sensible learning rate decay (cosine annealing is a strong choice), add L2 and dropout if overfitting appears, and use batch norm to improve stability. Monitor training and validation curves to guide decisions rather than guessing. Mastering these essentials strengthens your career foundation, since nearly all deep learning work depends on getting training right. In today’s industry, where models are larger and data is abundant, efficient and robust training is a competitive advantage that directly impacts performance and time-to-market.

Lecture Summary

01 Overview

This lecture teaches you how to effectively train artificial neural networks by walking through core optimization algorithms, learning rate strategies, regularization methods, and stability techniques used in practice. It begins with a short recap of gradient descent (GD) and stochastic gradient descent (SGD), which update model parameters by moving in the opposite direction of the gradient of a loss function. You will see why mini-batches are the practical way to compute gradients and how the noise they introduce can help a model escape poor local minima. Then it moves into more advanced optimizers—Momentum, RMSprop, and Adam—explaining how each one improves training speed and stability by smoothing or adapting learning steps.

Next, the lecture covers learning rate decay schedules. These schedules start with a relatively large learning rate so training can make fast progress early on, and then reduce the learning rate over time to refine the solution. You’ll learn three common methods: step decay (reduce by a fixed factor every few epochs), exponential decay (shrink by a constant factor every epoch), and cosine annealing (smoothly decreasing the rate following a cosine curve from a maximum to a minimum). Each method provides a different shape of decrease, and the idea is to achieve both quick initial learning and careful fine-tuning near the end.

Regularization is another big topic in this lecture. Regularization is crucial when a model might memorize the training data and not generalize well to new data, a problem known as overfitting. You’ll learn L2 regularization (also called weight decay), which encourages small weights by adding a penalty on the sum of squared weights, and L1 regularization, which encourages sparsity by penalizing the sum of absolute values of weights. The lecture also explains Dropout, a method where you randomly set some neurons’ outputs to zero during training. Dropout acts as a kind of ensemble, preventing any single neuron from dominating and improving generalization; at test time, no neurons are dropped.

To improve training stability and speed, the lecture introduces batch normalization. Batch normalization normalizes the activations of each layer using the mean and variance computed over the current mini-batch. After normalization, it applies a learned scale (gamma) and shift (beta), typically starting at gamma=1 and beta=0. This process allows the model to stabilize intermediate values in the network, often enabling larger learning rates and faster convergence.

The lecture closes with practical, experience-based tips. Choose an architecture appropriate to the task and the amount of data: deeper and wider networks are more powerful but require more data and regularization. Preprocess inputs by normalizing or scaling features and removing obvious outliers because neural networks are sensitive to feature scales. Initialize weights properly—randomly and with methods like Xavier or He initialization—to maintain healthy signal flow. Pick a reasonable learning rate and consider adding a decay schedule. Monitor training and validation losses to detect overfitting, visualize weights to catch issues early, and always maintain a representative validation set for tuning hyperparameters like learning rate and regularization strength.

Who is this lecture for? It is aimed at beginners to intermediate learners who have basic familiarity with machine learning and neural networks (e.g., knowing what a loss function is and what a model’s parameters are). You do not need deep math to benefit, but comfort with gradients and the idea of optimization helps. After completing the lecture, you will be able to pick and configure optimizers like Momentum, RMSprop, and Adam; design and apply learning rate decay schedules; use regularization techniques such as L1, L2, and Dropout; apply batch normalization; and follow a practical training workflow with data preprocessing, weight initialization, monitoring, and validation.

The lecture is structured as follows: it starts with a recap of basic gradient-based training and mini-batch SGD. It then introduces three optimizers—Momentum, RMSprop, and Adam—explaining their update rules and intuitions. Next come learning rate decay strategies, including step, exponential, and cosine annealing, with their formulas and roles. After that, it dives into regularization: L2, L1, and Dropout, with the effects each has on weights and generalization. Then it explains batch normalization and why it improves training stability and performance, including typical initialization of its parameters. Finally, it wraps up with a checklist of practical training tips: architecture selection, preprocessing, initialization, learning rate choice, monitoring, weight visualization, and using a proper validation set.

02 Key Concepts

- 01

Loss Function and Optimization Goal: A loss function measures how wrong the network’s predictions are, and training means finding parameters (theta) that minimize this loss. We need a clear target to improve on step by step; the loss function gives that feedback. Technically, we move parameters in the direction that reduces the loss, guided by gradients. Without this setup, training would have no direction and could not systematically improve. For example, for classification, cross-entropy loss helps guide the network to assign higher probabilities to the correct class.

- 02

Gradient Descent (GD): Gradient descent updates parameters by stepping opposite to the gradient of the loss. It’s like walking downhill on a landscape using the local slope to guide you. Formally, theta := theta − eta * grad(L(theta)), where eta is the learning rate. Without GD, we would not have a systematic way to reduce loss; guesswork would be inefficient. For example, running GD on a small toy dataset steadily decreases loss with each step if the learning rate is sensible.

- 03

Stochastic Gradient Descent (SGD) with Mini-Batches: SGD approximates the full gradient using a small random batch of examples each step. It’s like estimating the direction of a hill’s slope by sampling a few points instead of the entire mountain. Mathematically, grad(L(theta)) is approximated by grad over batch B, making updates faster and adding helpful noise. Without mini-batches, training on large datasets would be too slow and memory-intensive. For instance, using batch size 64 reduces compute per step and often leads to quicker progress per unit time.

- 04

Backpropagation: Backpropagation efficiently computes gradients of the loss with respect to all parameters by applying the chain rule layer by layer. Picture passing error signals backward through the network like tracing blame for a mistake from the output to earlier steps. Technically, it reuses intermediate values stored during the forward pass to compute derivatives in reverse. Without backprop, gradient computation would be too slow to be practical for deep nets. For example, using backprop on a 5-layer MLP makes it feasible to train on thousands of images in minutes.

- 05

Momentum: Momentum adds a velocity that accumulates past gradients to keep moving in stable directions. It’s like pushing a heavy shopping cart: once moving, it resists small bumps and keeps rolling. The update is v := mu * v − eta * grad, then theta := theta + v, where mu (e.g., 0.9) controls how much history we keep. Without momentum, updates can zig-zag across narrow ravines and progress slowly. For example, momentum smooths noisy gradients and speeds convergence on long, gently sloping directions.

- 06

RMSprop: RMSprop adapts learning rates per parameter using a moving average of squared gradients. Imagine giving shorter steps to parts that stumble a lot and longer steps to parts that move smoothly. It maintains S := beta * S + (1 − beta) * grad^2 and updates theta := theta − eta * grad / sqrt(S + epsilon). Without RMSprop, some parameters may dominate or lag, causing unstable learning. For example, RMSprop calms parameters with consistently large gradients to prevent divergence.

- 07

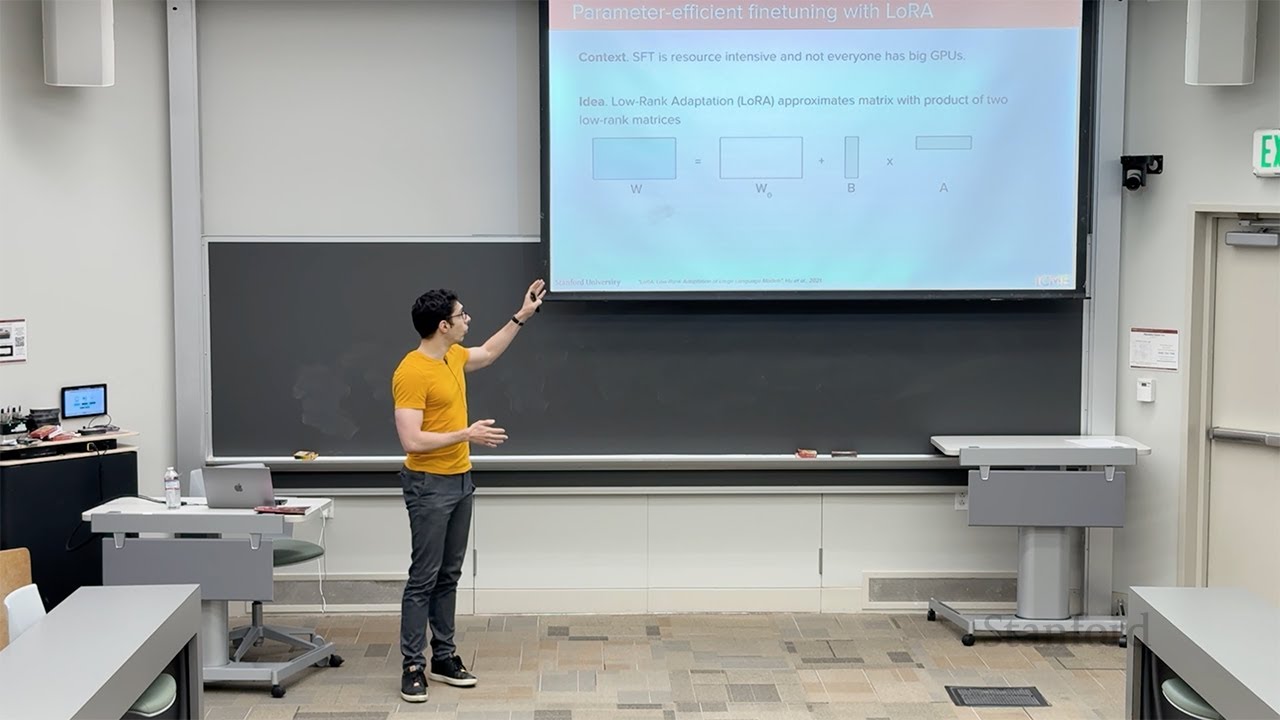

Adam Optimizer: Adam combines momentum-like first-moment tracking with RMSprop-like second-moment scaling, plus bias correction. It’s like having both speed control and traction control on a car. It keeps M := beta1 * M + (1 − beta1) * grad and V := beta2 * V + (1 − beta2) * grad^2, then uses bias-corrected M_hat, V_hat to update theta. Without Adam, you might have to hand-tune learning rates a lot more; Adam often works well with defaults (beta1=0.9, beta2=0.999, epsilon=1e−8). For example, Adam usually converges quickly across many tasks with minimal hyperparameter fiddling.

- 08

Learning Rate Decay (General Idea): Learning rate decay reduces the step size over training so you start fast and finish carefully. It’s like sprinting at the start of a race and then slowing down to take careful steps near the finish line. Many schedules work, as long as they meaningfully decrease the learning rate over time. Without decay, a learning rate large enough for fast early progress tends to keep the parameters bouncing around a minimum instead of settling into it. For example, a high initial rate explores widely, then a lower rate fine-tunes around a promising area.

- 09

Step Decay: Step decay multiplies the learning rate by a fixed factor every K epochs (e.g., divide by 10 every 30 epochs). It’s like going down stairs: flat for a while, then a sudden drop. This is easy to implement and often effective in staged training. Without these scheduled drops, the model might not settle into a good minimum. For example, dropping the rate at epochs 30, 60, and 90 is a common schedule for image classification and often improves accuracy.

- 10

Exponential Decay: Exponential decay shrinks the learning rate by a constant factor every epoch. It’s like smoothly turning down a dimmer switch. The formula is eta_t = eta_0 * k^t, with k between 0 and 1. Without a smooth decay, the learning rate could remain too aggressive late in training. For example, with k=0.95 the rate falls to about 60% of eta_0 after 10 epochs (0.95^10 ≈ 0.599), steadily stabilizing updates.

- 11

Cosine Annealing: Cosine annealing lowers the learning rate following a half-cosine from a maximum to a minimum over T_max epochs. It’s like gently rolling down a smooth hill rather than stepping down stairs. The learning rate is eta(t) = eta_min + 0.5 * (eta_max − eta_min) * (1 + cos(pi * t / T_max)). Without smooth decay, late training can be jittery; cosine annealing often yields better final performance. For example, setting eta_max high at the start and decaying to eta_min near zero often leads to strong convergence.

- 12

Regularization (Concept): Regularization techniques reduce overfitting by discouraging overly complex models that memorize training data. It’s like adding rules that prevent cheating on a test. Approaches include L2 (weight decay), L1 (sparsity), and Dropout (randomly disabling neurons during training). Without regularization, validation performance typically degrades as the model memorizes. For example, with too many parameters and no regularization, training accuracy soars while test accuracy stalls or drops.

- 13

L2 Regularization (Weight Decay): L2 adds a penalty proportional to the sum of squared weights, encouraging smaller, smoother weights. Imagine stretching a rubber band that pulls weights toward zero but not all the way. The loss becomes L(theta) + (lambda/2)*||theta||^2, which nudges updates to shrink weights slightly each step. Without L2, weights can grow very large and make the model unstable and less general. For example, adding L2 often improves test accuracy by reducing sensitivity to noise.

- 14

L1 Regularization: L1 penalizes the sum of absolute values of weights, encouraging many weights to become exactly zero (sparse). It’s like pruning branches so only the strongest remain. The loss is L(theta) + lambda*||theta||_1, which can zero out unhelpful features. Without L1, the model may spread small weights everywhere and be harder to interpret. For example, L1 can select a small subset of informative inputs in high-dimensional data.

- 15

Dropout: Dropout randomly sets neuron outputs to zero during training with probability p. It’s like having random teammates sit out each practice so the team cannot rely on any single player. This prevents co-adaptation and acts like training many thinned networks; at test time, no neurons are dropped. Without dropout, large networks can overfit easily by memorizing patterns. For example, p=0.5 in a hidden layer often boosts generalization on image and text tasks.

- 16

Batch Normalization: Batch norm normalizes activations using mini-batch mean and variance, then applies learnable scale gamma and shift beta. It’s like standardizing test scores in a class, then letting the teacher rescale if needed. This stabilizes intermediate distributions, often allowing larger learning rates and faster training. Without batch norm, training can be sensitive to initialization and learning rate choices. For example, initializing gamma=1 and beta=0 makes the layer start as pure normalization and then adapt.

- 17

Weight Initialization: Good initialization avoids symmetry and keeps signals flowing with the right variance. It’s like starting a race from a fair, balanced position rather than from inside a ditch. Random uniform/normal breaks symmetry; Xavier (Glorot) and He initializations further keep activation variances stable across layers. Without careful initialization, gradients may vanish or explode, stalling learning. For example, He initialization works well with ReLU-type activations.

- 18

Validation and Monitoring: A validation set estimates how well the model will do on new data and guides hyperparameter tuning. It’s like a rehearsal before the real performance. Track training and validation loss curves; if training loss falls while validation loss rises, overfitting is happening. Without validation, you may choose hyperparameters that look good on training but fail in the real world. For example, pick learning rate and regularization strength by selecting what minimizes validation loss.

03 Technical Details

Overall Architecture/Structure of Training

- Objective and Data Flow

- We define a loss function L(theta) that measures how far the network’s predictions are from targets. Training data is processed in mini-batches. For a mini-batch B, we perform a forward pass to compute outputs and the batch loss L_B(theta). Then, we run backpropagation to compute the gradient of the loss with respect to parameters, grad L_B(theta). Finally, we update parameters according to an optimization rule. This loop repeats over many epochs (full passes through the training dataset).

- Gradient Descent and Stochastic Gradient Descent

- Gradient Descent (GD): theta := theta − eta * grad L(theta), where eta is the learning rate. Plain GD computes this gradient over the whole dataset at every step, which is exact but slow. Stochastic Gradient Descent (SGD) approximates it with a mini-batch: for a mini-batch B, we compute grad L_B(theta) and update theta := theta − eta * grad L_B(theta). The mini-batch size controls the trade-off between noisy gradients (small batches) and more accurate gradient estimates (large batches). The noise in SGD can help the optimizer escape shallow local minima and saddle points, where the exact gradient is small or zero, instead of stalling in flat or shallow areas.
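To make "approximates the full gradient" concrete, here is a minimal sketch, assuming a toy 1-D linear regression with mean-squared-error loss (the data, the helper `grad_mse`, and the batch size of 64 are illustrative choices, not from the lecture). It shows that one mini-batch gradient is a noisy estimate, while averaging the gradients of equal-size batches that cover the dataset recovers the full-batch gradient exactly.

```python
import random

def grad_mse(w, b, xs, ys):
    """Gradient of the mean squared error (1/n) * sum((w*x + b - y)**2) w.r.t. w and b."""
    n = len(xs)
    gw = gb = 0.0
    for x, y in zip(xs, ys):
        err = w * x + b - y
        gw += 2.0 * err * x / n
        gb += 2.0 * err / n
    return gw, gb

rng = random.Random(0)
xs = [rng.uniform(-1.0, 1.0) for _ in range(1024)]
ys = [3.0 * x + 2.0 + rng.gauss(0.0, 0.1) for x in xs]
w, b = 0.0, 0.0  # current parameters

full_grad = grad_mse(w, b, xs, ys)  # exact, but touches all 1024 examples

# Shuffle once and split into equal-size mini-batches of 64 examples (16 batches).
idx = list(range(len(xs)))
rng.shuffle(idx)
batch_size = 64
batch_grads = []
for start in range(0, len(idx), batch_size):
    batch = idx[start:start + batch_size]
    batch_grads.append(grad_mse(w, b, [xs[i] for i in batch], [ys[i] for i in batch]))

# Any single batch gradient is a noisy estimate of the full gradient...
print("full gradient: ", full_grad)
print("one mini-batch:", batch_grads[0])
# ...while the average over equal-size batches that cover the data recovers it.
avg_grad = (sum(g[0] for g in batch_grads) / len(batch_grads),
            sum(g[1] for g in batch_grads) / len(batch_grads))
print("batch average: ", avg_grad)
```

In real training each SGD step uses only one of these batch gradients, which is exactly where the cheaper steps and the helpful noise come from.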

- Momentum Optimizer

- Motivation: In a narrow valley, vanilla SGD tends to zig-zag: the gradient component across the valley flips sign from step to step, while progress along the valley floor stays slow. Momentum adds memory of past updates to smooth the trajectory.

- State and Updates: Maintain velocity v, initialized to zero. Each step: v := mu * v − eta * grad L_B(theta). Then theta := theta + v. The hyperparameter mu (momentum coefficient) is often around 0.9. Intuition: If grads mostly point the same way, v grows in magnitude, taking larger steps. If grads reverse, the previous v opposes the change, damping oscillations. Unrolling the recursion shows that v is an exponentially weighted sum of past gradients scaled by −eta: v_t = −eta * (g_t + mu * g_{t−1} + mu^2 * g_{t−2} + ...). With a constant gradient, the step therefore approaches eta / (1 − mu) times the gradient, i.e., 10× the plain SGD step for mu = 0.9.
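A minimal scalar sketch of the update above, assuming a constant gradient of 1.0 and illustrative values eta = 0.01, mu = 0.9 (the function name `momentum_step` is hypothetical). It checks the claim that the velocity grows from the plain SGD step toward eta / (1 − mu) times the gradient.

```python
def momentum_step(theta, v, grad, eta=0.01, mu=0.9):
    """One heavy-ball momentum update: v := mu*v - eta*grad, then theta := theta + v."""
    v = mu * v - eta * grad
    theta = theta + v
    return theta, v

theta, v = 0.0, 0.0
grad = 1.0  # a constant gradient, like a long, straight slope
velocities = []
for step in range(200):
    theta, v = momentum_step(theta, v, grad)
    velocities.append(v)

# Plain SGD would always step by -eta * grad = -0.01.
# Momentum's step grows as -0.1 * (1 - 0.9**t), approaching -eta * grad / (1 - mu) = -0.1.
print(velocities[0], velocities[9], velocities[-1])
```

If the gradient instead flipped sign every step, the mu * v term would partly cancel the new gradient, which is the damping effect described above.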

- RMSprop Optimizer

- Motivation: Different parameters may have gradients of very different magnitudes. A single global learning rate can be too big for some and too small for others.

- State and Updates: Maintain S (same shape as theta) as an exponential moving average of squared gradients: S := beta * S + (1 − beta) * (grad^2) (element-wise square). Update parameters using a normalized gradient: theta := theta − eta * grad / sqrt(S + epsilon). Here, epsilon is a small constant (e.g., 1e−8) added for numerical stability to avoid division by zero. Intuition: If a parameter’s gradient is consistently large, S grows and reduces its effective step size; if small, S shrinks and increases the step.
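Here is a minimal element-wise sketch of that RMSprop rule, assuming two parameters whose gradients stay fixed at 100 and 0.01 (an artificial setup chosen only to exaggerate the scale difference; eta = 0.001 and beta = 0.9 are illustrative). Plain SGD would move the first parameter 10,000× further than the second; RMSprop moves them by almost the same amount.

```python
import math

def rmsprop_step(theta, S, grad, eta=0.001, beta=0.9, eps=1e-8):
    """Element-wise RMSprop on lists of floats:
    S := beta*S + (1 - beta)*grad**2;  theta := theta - eta * grad / sqrt(S + eps)."""
    new_S = [beta * s + (1 - beta) * g * g for s, g in zip(S, grad)]
    new_theta = [t - eta * g / math.sqrt(s + eps)
                 for t, g, s in zip(theta, grad, new_S)]
    return new_theta, new_S

theta = [0.0, 0.0]
S = [0.0, 0.0]
grads = [100.0, 0.01]  # one parameter with large gradients, one with tiny gradients
for _ in range(50):
    theta, S = rmsprop_step(theta, S, grads)

# Both parameters have moved by nearly the same distance despite the 10,000x gradient gap.
print(theta)
```

Once S has warmed up, grad / sqrt(S) is close to ±1 for any constant gradient, which is why the effective step size depends on eta rather than on the raw gradient scale.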

- Adam Optimizer

- Motivation: Combine momentum’s first-moment averaging and RMSprop’s second-moment scaling to get robust, adaptive updates with minimal tuning.

- State: Keep M (first moment of gradients) and V (second moment of gradients) for each parameter. Initialization usually M=0, V=0. At step t: M := beta1 * M + (1 − beta1) * grad. V := beta2 * V + (1 − beta2) * (grad^2). Bias correction: M_hat := M / (1 − beta1^t), V_hat := V / (1 − beta2^t). Update: theta := theta − eta * M_hat / (sqrt(V_hat) + epsilon). Defaults: beta1=0.9, beta2=0.999, epsilon=1e−8. Intuition: M captures direction (smoothed gradient), V adjusts step size per parameter (like local curvature sensitivity), and bias correction fixes the early-step underestimation caused by initializing M and V at zero.
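A minimal scalar sketch of one Adam step with the defaults listed above; the `bias_correction` switch is a hypothetical flag added only for comparison. On the very first step from M = V = 0, the corrected update has magnitude almost exactly eta for any gradient scale. Dropping the correction would make the first step about 0.1 / sqrt(0.001) ≈ 3.16 times larger, because V is biased toward zero more strongly than M with these betas.

```python
import math

def adam_step(theta, M, V, grad, t, eta=1e-3, beta1=0.9, beta2=0.999, eps=1e-8,
              bias_correction=True):
    """One Adam update for a scalar parameter; t is the 1-based step counter."""
    M = beta1 * M + (1 - beta1) * grad          # first moment (smoothed gradient)
    V = beta2 * V + (1 - beta2) * grad * grad   # second moment (smoothed squared gradient)
    if bias_correction:
        M_hat = M / (1 - beta1 ** t)
        V_hat = V / (1 - beta2 ** t)
    else:                                        # uncorrected, for comparison only
        M_hat, V_hat = M, V
    theta = theta - eta * M_hat / (math.sqrt(V_hat) + eps)
    return theta, M, V

# First step from M = V = 0 for two very different gradient scales.
first_steps = {}
for g in (5.0, 0.02):
    corrected, _, _ = adam_step(0.0, 0.0, 0.0, g, t=1)
    uncorrected, _, _ = adam_step(0.0, 0.0, 0.0, g, t=1, bias_correction=False)
    first_steps[g] = (corrected, uncorrected)
    print(f"grad={g}: corrected step {corrected:.6f}, uncorrected step {uncorrected:.6f}")
```

In a full optimizer, M and V are kept per parameter and t increases by one after every update, so the correction factors 1 − beta1^t and 1 − beta2^t fade toward 1 as training proceeds.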

- Learning Rate Decay Schedules

- Why decay? Early in training, larger steps help explore and move quickly toward a good area; later, smaller steps allow precise adjustment near a minimum.

- Step Decay: eta := eta * gamma at specified epochs (e.g., every 30 epochs), with gamma < 1 (like 0.1). Pros: simple, known strong baselines. Cons: sudden changes may destabilize briefly.

- Exponential Decay: eta_t = eta_0 * k^t with 0 < k < 1. Pros: smooth and continuous. Cons: need to pick k carefully so it neither decays too fast nor too slow.

- Cosine Annealing: eta(t) = eta_min + 0.5 * (eta_max − eta_min) * (1 + cos(pi * t / T_max)), for t in [0, T_max]. Pros: smooth start and smooth finish; often yields better final performance. Cons: need to choose eta_min, eta_max, and T_max thoughtfully; typical to pick eta_min near zero and eta_max as your initial learning rate.
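The three schedules are short enough to write out directly. This is a minimal sketch of the formulas above as plain functions of the epoch index; the function names and the example values (eta_0 = 0.1, drops every 30 epochs, k = 0.95, T_max = 90) are illustrative assumptions, not settings from the lecture.

```python
import math

def step_decay(eta0, epoch, drop_every=30, gamma=0.1):
    """Multiply eta0 by gamma once for every completed block of `drop_every` epochs."""
    return eta0 * gamma ** (epoch // drop_every)

def exponential_decay(eta0, epoch, k=0.95):
    """eta_t = eta0 * k**t with 0 < k < 1."""
    return eta0 * k ** epoch

def cosine_annealing(epoch, T_max, eta_max, eta_min=0.0):
    """eta(t) = eta_min + 0.5*(eta_max - eta_min)*(1 + cos(pi*t/T_max)), for t in [0, T_max]."""
    return eta_min + 0.5 * (eta_max - eta_min) * (1 + math.cos(math.pi * epoch / T_max))

print("epoch   step       exp        cosine")
for epoch in (0, 29, 30, 60, 90):
    print(f"{epoch:5d}  {step_decay(0.1, epoch):.6f}  "
          f"{exponential_decay(0.1, epoch):.6f}  "
          f"{cosine_annealing(epoch, T_max=90, eta_max=0.1):.6f}")
```

Step decay stays flat and then drops by 10×, exponential decay loses 5% per epoch, and cosine annealing passes exactly halfway (0.05) at epoch 45 before flattening out near eta_min.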

- Regularization Methods

- Purpose: Limit model complexity and improve generalization by discouraging overfitting to training data.

- L2 Regularization (Weight Decay): Modify the loss to L_total = L(theta) + (lambda/2) * ||theta||^2, where ||theta||^2 is the sum of squared weights. The gradient of the L2 term is lambda * theta, so each update shrinks weights slightly, pulling them toward zero. This reduces variance in predictions and makes the model more robust to noise.

- L1 Regularization: Modify the loss to L_total = L(theta) + lambda * ||theta||_1, where ||theta||_1 is the sum of absolute weight values. The subgradient for a weight w is lambda * sign(w) (or a value in [−lambda, lambda] when w=0). This tends to push many weights exactly to zero, producing sparse models that can be easier to interpret and more efficient.

- Dropout: During training, independently set each neuron’s output to zero with probability p (e.g., p=0.5 for a hidden layer). This prevents neurons from co-adapting and forces the network to spread learned representations across many units. At test time, no units are dropped. To keep expected activations the same in both modes, implementations either scale the surviving activations by 1/(1 − p) during training (inverted dropout, the approach used by frameworks such as PyTorch) or scale outputs by (1 − p) at test time.
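A minimal sketch of the three regularizers on plain Python lists, assuming illustrative values lambda = 0.01 and p = 0.5 (all function names are hypothetical). The L2 term contributes lambda * w to each weight's gradient, so a plain gradient step on the penalty alone multiplies every weight by (1 − eta * lambda); the L1 term contributes lambda * sign(w); and inverted dropout rescales survivors by 1/(1 − p) so the expected activation is unchanged.

```python
import random

def l2_penalty_grad(weights, lam):
    """Gradient of (lam/2) * sum(w**2): lam * w for each weight."""
    return [lam * w for w in weights]

def l1_penalty_subgrad(weights, lam):
    """A subgradient of lam * sum(|w|): lam * sign(w), choosing 0 at w == 0."""
    return [lam * ((w > 0) - (w < 0)) for w in weights]

def inverted_dropout(activations, p, training, rng=random):
    """Training: zero each activation with probability p and scale survivors by 1/(1 - p).
    Test: return the activations unchanged (no units dropped, no rescaling needed)."""
    if not training or p == 0.0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

print(l2_penalty_grad([0.5, -2.0], 0.01))
print(l1_penalty_subgrad([0.5, -2.0, 0.0], 0.01))

rng = random.Random(0)
acts = [1.0] * 100_000
train_out = inverted_dropout(acts, p=0.5, training=True, rng=rng)
test_out = inverted_dropout(acts, p=0.5, training=False)
print(sum(train_out) / len(train_out))                        # close to 1.0: expectation preserved
print(sum(1 for a in train_out if a == 0.0) / len(train_out))  # close to p = 0.5
print(test_out == acts)                                       # nothing dropped at test time
```

Because the rescaling happens during training, inference code needs no dropout-specific changes, which is the usual reason to prefer the inverted form.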

- Batch Normalization

- Purpose: Stabilize and speed training by normalizing intermediate activations.

- Per mini-batch and per activation channel (or per neuron), compute mean μ_B and variance σ_B^2. Normalize: x_hat = (x − μ_B) / sqrt(σ_B^2 + epsilon). Then apply learned scale and shift: y = gamma * x_hat + beta. Initialize gamma=1 and beta=0 so the layer initially just normalizes. Over training, gamma and beta let the network choose the optimal scale and offset. At test time, batch norm uses running averages of the mean and variance collected during training instead of the current batch’s statistics, so a prediction does not depend on the other examples in its batch. Benefits include more stable gradients, the ability to use higher learning rates, and often improved generalization.
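A minimal training-mode sketch of the batch norm forward pass for a single feature, assuming epsilon = 1e-5 (a common framework default, used here as an assumption) and the biased batch variance. A real layer repeats this independently for every feature or channel and also maintains the running statistics used at test time, which this sketch omits.

```python
import math

def batch_norm_forward(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Training-mode batch norm for one feature over a mini-batch:
    x_hat = (x - mean_B) / sqrt(var_B + eps);  y = gamma * x_hat + beta."""
    n = len(batch)
    mean = sum(batch) / n
    var = sum((x - mean) ** 2 for x in batch) / n   # biased (population) batch variance
    x_hat = [(x - mean) / math.sqrt(var + eps) for x in batch]
    return [gamma * xh + beta for xh in x_hat]

batch = [12.0, 15.0, 9.0, 30.0, 14.0, 10.0]
y = batch_norm_forward(batch)                          # gamma=1, beta=0: pure normalization
y_scaled = batch_norm_forward(batch, gamma=2.0, beta=3.0)
print([round(v, 3) for v in y])         # mean ~0, variance ~1
print([round(v, 3) for v in y_scaled])  # mean ~3, standard deviation ~2
```

With the initial gamma=1 and beta=0 the output is just the standardized batch; learning other values of gamma and beta lets the layer undo or reshape the normalization where that helps.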

- Practical Training Tips

- Architecture Selection: Match model capacity (layers and neurons) to data size and task complexity. Too small: underfitting; too large: overfitting without sufficient regularization and data.

- Data Preprocessing: Normalize or scale features to similar ranges (e.g., zero mean, unit variance). Remove or limit the influence of obvious outliers that can skew gradients. Neural nets are sensitive to feature scale; consistent scales make optimization more stable and efficient.

- Weight Initialization: Avoid all-zeros initialization because it makes all neurons compute the same thing and gradients identical, preventing learning. Random uniform/normal breaks symmetry. Xavier (Glorot) initialization scales weights based on fan-in and fan-out to keep activation variance steady, commonly for tanh/sigmoid. He initialization scales weights for ReLU-like activations to keep forward and backward variances healthy.

- Learning Rate Selection: Start with a reasonable base rate (e.g., 1e−3 for Adam, task-dependent for SGD) and consider a decay schedule. Too high causes divergence or chaotic loss; too low slows learning excessively.

- Monitoring: Track both training and validation losses. If training improves while validation worsens, apply stronger regularization, reduce capacity, or gather more data. Visualize weights and sometimes activations; extremely large or tiny weights suggest adjusting learning rate or regularization.

- Validation Set: Reserve a representative validation set to tune hyperparameters (learning rate, regularization strength, dropout rate, etc.). This set should reflect the data your model will see in the real world.
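The initialization tip can be checked numerically. This is a minimal sketch, assuming bias-free dense layers of width 256, ten ReLU layers, and the normal variants of both schemes (Xavier: Var(w) = 2 / (fan_in + fan_out); He: Var(w) = 2 / fan_in); the helper names are hypothetical. It measures how the mean squared activation changes from the input to the last layer.

```python
import math
import random

def xavier_normal(fan_in, fan_out, rng):
    # Glorot/Xavier (normal variant): Var(w) = 2 / (fan_in + fan_out)
    std = math.sqrt(2.0 / (fan_in + fan_out))
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]

def he_normal(fan_in, fan_out, rng):
    # He/Kaiming (normal variant) for ReLU: Var(w) = 2 / fan_in
    std = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]

def relu_layer(W, h):
    # Bias-free dense layer followed by ReLU
    return [max(0.0, sum(w * x for w, x in zip(row, h))) for row in W]

def mean_square(h):
    return sum(x * x for x in h) / len(h)

def signal_ratio(init, width=256, depth=10, seed=0):
    """Mean squared activation after `depth` ReLU layers, relative to the input."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(width)]
    h = x
    for _ in range(depth):
        h = relu_layer(init(width, width, rng), h)
    return mean_square(h) / mean_square(x)

he_ratio = signal_ratio(he_normal)
xavier_ratio = signal_ratio(xavier_normal)
print(f"He:     {he_ratio:.3f}")      # stays on the order of 1
print(f"Xavier: {xavier_ratio:.6f}")  # roughly halves per ReLU layer
```

Both runs reuse the same seed, so with equal fan-in and fan-out the Xavier weights here are exactly the He weights scaled by 1/sqrt(2); after ten ReLU layers the Xavier signal is therefore 2^-10 of the He signal. ReLU zeroes about half of each layer's pre-activations, and He's extra factor of 2 compensates for that loss.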

Implementation Details and Execution Flow (Pseudo-Workflow)

- Initialize: Choose architecture (layers, neurons), activation functions, and loss. Initialize weights (e.g., He for ReLU), biases to small values (often zero). Set optimizer (e.g., Adam with defaults). Pick an initial learning rate and a learning rate decay schedule.

- Preprocess Data: Normalize or standardize inputs; optionally clip or handle outliers. Split data into training and validation sets.

- Training Loop per Epoch: a) Shuffle the training data and form mini-batches (e.g., batch size 32–256). b) For each mini-batch B, run the forward pass to compute predictions and the loss L_B; batch normalization and dropout layers are applied here as configured, with dropout active only during training. c) Run backprop to compute gradients of L_B with respect to all parameters. d) Update the optimizer state (e.g., M and V in Adam, S in RMSprop, v in Momentum) and then update the parameters.

- End of Epoch: Compute training loss/accuracy and validation loss/accuracy. Adjust learning rate if using step decay at scheduled epochs; otherwise, the scheduler updates it automatically. Check for overfitting signs and adjust regularization or capacity if needed.

- Repeat: Continue for T_max epochs or until convergence criteria (e.g., validation stops improving). Save the best model based on validation metrics.
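The workflow above can be sketched end to end on a toy problem. This is a minimal illustration under stated assumptions, not the lecture's code: the data is a hypothetical 1-D regression with a raw feature on a 0–100 scale, the model is a single linear unit, and plain mini-batch SGD with step decay stands in for Adam to keep the sketch short. The code splits the data before standardizing so the validation set never influences the preprocessing statistics.

```python
import random

def mse(w, b, xs, ys):
    """Mean squared error of the linear model y ≈ w*x + b."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def train(epochs=30, batch_size=32, eta0=0.1, seed=0):
    rng = random.Random(seed)
    # Hypothetical toy data: raw feature on a 0-100 scale, y = 0.03*x + 2 + noise.
    xs = [rng.uniform(0.0, 100.0) for _ in range(1000)]
    ys = [0.03 * x + 2.0 + rng.gauss(0.0, 0.1) for x in xs]

    # Split, then standardize with training-set statistics only.
    train_x, val_x = xs[:800], xs[800:]
    train_y, val_y = ys[:800], ys[800:]
    mu = sum(train_x) / len(train_x)
    sd = (sum((x - mu) ** 2 for x in train_x) / len(train_x)) ** 0.5
    train_x = [(x - mu) / sd for x in train_x]
    val_x = [(x - mu) / sd for x in val_x]

    w, b = 0.0, 0.0                   # zero init is harmless for a single linear unit
    best = (float("inf"), w, b, -1)   # (val loss, w, b, epoch) of the best model so far
    history = []
    order = list(range(len(train_x)))
    for epoch in range(epochs):
        eta = eta0 * 0.1 ** (epoch // 10)          # step decay: /10 every 10 epochs
        rng.shuffle(order)                          # a) reshuffle, then form mini-batches
        for start in range(0, len(order), batch_size):
            batch = order[start:start + batch_size]
            gw = gb = 0.0
            for i in batch:                         # b) forward pass, c) batch MSE gradient
                err = w * train_x[i] + b - train_y[i]
                gw += 2.0 * err * train_x[i] / len(batch)
                gb += 2.0 * err / len(batch)
            w -= eta * gw                           # d) plain SGD parameter update
            b -= eta * gb
        # End of epoch: monitor both losses and keep the best model by validation loss.
        train_loss, val_loss = mse(w, b, train_x, train_y), mse(w, b, val_x, val_y)
        history.append((epoch, train_loss, val_loss))
        if val_loss < best[0]:
            best = (val_loss, w, b, epoch)
    return best, history

best, history = train()
print(f"best val MSE {best[0]:.4f} at epoch {best[3]} (w={best[1]:.3f}, b={best[2]:.3f})")
```

Since the noise has variance 0.01, a validation MSE near 0.01 means the model has fit the signal; a validation curve that rose while training loss kept falling would be the overfitting signal described above.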

Tools and Libraries (Conceptual)

- Although not shown in code here, in practice you would use a deep learning library like PyTorch or TensorFlow. These frameworks provide automatic differentiation (backprop), built-in optimizers (SGD, Momentum, RMSprop, Adam), learning rate schedulers (step, exponential, cosine), regularization utilities (weight decay parameter in optimizers), dropout and batch norm layers, and monitoring tools (TensorBoard or custom logging).

Tips and Warnings

- Learning Rate Pitfalls: If the loss explodes or becomes NaN, reduce the learning rate, check for a too-small epsilon in RMSprop/Adam, or reduce the batch size. Try gradient clipping if gradients explode.

- Regularization Balance: Too much regularization leads to underfitting; too little causes overfitting. Tune lambda (L1/L2) and dropout p on the validation set.

- Batch Size Interactions: Larger batches yield smoother gradients but may generalize slightly worse; smaller batches add noise which can help escape sharp minima. Batch norm’s behavior depends on batch size; too tiny batches may give noisy statistics.

- Initialization Mismatches: Using Xavier with ReLU may cause vanishing activations; prefer He for ReLU families. Check early-layer activation histograms to verify healthy signal propagation.

- Schedule Sensitivity: Step decay requires choosing the right epochs for drops; exponential decay needs a sensible k; cosine annealing needs well-chosen eta_max, eta_min, and T_max. If convergence stalls late, lower the floor (eta_min) or extend T_max.

- Validation Leakage: Never tune on the test set; only use validation for hyperparameters. Keep the validation set distribution close to the real deployment setting.

Putting It All Together

- A solid baseline recipe: preprocess inputs (standardize), initialize with He (for ReLU networks), use Adam (beta1=0.9, beta2=0.999, epsilon=1e−8) with an initial learning rate around 1e−3, and apply a cosine annealing schedule over your planned epochs. Add L2 weight decay and dropout if the model is large or overfits. Include batch normalization to stabilize training and allow a higher starting learning rate. Monitor training/validation curves, adjust regularization and learning rate if validation worsens, and always select final hyperparameters based on validation performance.

This end-to-end approach mirrors what practitioners do daily and reflects the lecture’s central message: combine the right optimizer, learning rate strategy, regularization, and normalization with careful monitoring to train neural networks that not only fit the training data but also generalize well.

04 Examples

- 💡

Mini-Batch SGD Example: Input: a dataset of 50,000 images. Processing: form batches of 64 images; for each batch, compute the forward pass, loss, and gradient; update parameters using SGD with learning rate 0.01. Output: parameters change a little per batch, and the training loss trends downward over epochs. Key point: mini-batches speed up computation and the noise in the gradient helps explore the loss surface.
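
Below is a scaled-down, runnable version of this loop. It assumes a toy 1D linear-regression problem (y = 3x + 1, no noise) in place of images, with a mean squared error loss. The batch size of 64 and learning rate of 0.01 match the example. The dataset size, epoch count, and helper names are illustrative assumptions.

```python
import random

def make_toy_data(n, rng):
    """Noise-free toy regression data: y = 3x + 1 with x uniform in [-1, 1]."""
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, 3.0 * x + 1.0) for x in xs]

def mse(data, w, b):
    """Mean squared error of the model w * x + b over the whole dataset."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def minibatch_sgd(data, batch_size=64, lr=0.01, epochs=100, seed=0):
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    data = list(data)
    history = []
    for _ in range(epochs):
        rng.shuffle(data)  # new random batches every epoch
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Gradient of the batch's mean squared error with respect to w and b
            grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
            grad_b = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
            # Step opposite to the gradient
            w -= lr * grad_w
            b -= lr * grad_b
        history.append(mse(data, w, b))  # full-dataset loss after each epoch
    return w, b, history

data = make_toy_data(1000, random.Random(42))
w, b, history = minibatch_sgd(data)
```

`history` trends downward across epochs even though each individual batch gradient is only an approximation of the full-dataset gradient.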

- 💡

Momentum on a Narrow Valley: Input: a 2D loss surface shaped like a long, narrow valley. Processing: apply momentum with mu=0.9 so velocity builds along the valley direction while damping oscillations across it. Output: the path quickly aligns with the valley and moves faster toward the minimum. Key point: momentum reduces zig-zagging and accelerates convergence along consistent directions.
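
The following is a small numerical sketch of this effect. It assumes the quadratic valley f(x, y) = 0.5 * (x^2 + 100 * y^2), which is shallow along x and steep along y. The code uses one common momentum formulation (v = mu * v + grad, then theta = theta - lr * v). Setting mu = 0 recovers plain gradient descent with the same learning rate.

```python
def valley_grad(x, y):
    """Gradient of f(x, y) = 0.5 * (x**2 + 100 * y**2)."""
    return x, 100.0 * y

def valley_loss(x, y):
    return 0.5 * (x ** 2 + 100.0 * y ** 2)

def run_momentum(lr, mu, steps, start=(1.0, 1.0)):
    """Heavy-ball momentum; mu = 0 gives plain gradient descent."""
    x, y = start
    vx, vy = 0.0, 0.0
    for _ in range(steps):
        gx, gy = valley_grad(x, y)
        vx = mu * vx + gx  # velocity accumulates consistent gradient directions
        vy = mu * vy + gy  # sign flips across the valley partly cancel out
        x -= lr * vx
        y -= lr * vy
    return valley_loss(x, y)

plain = run_momentum(lr=0.015, mu=0.0, steps=200)
momentum = run_momentum(lr=0.015, mu=0.9, steps=200)
```

With lr = 0.015, plain gradient descent shrinks x by only 1.5% per step. Momentum's velocity builds up along the valley, so it reaches a far lower loss in the same 200 steps. Plain gradient descent cannot simply use a larger step here: any lr above 0.02 makes the steep y-direction diverge, because each step multiplies y by (1 - 100 * lr).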

- 💡

RMSprop Per-Parameter Adaptation: Input: a network where one weight consistently sees large gradients while another sees small ones. Processing: maintain S, a moving average of squared gradients, for each parameter. The large-gradient parameter’s S grows, shrinking its step size, while the small-gradient parameter’s S stays small, keeping its steps relatively larger. Output: both parameters update at balanced rates. Key point: RMSprop prevents instability from big gradients while ensuring small-gradient parameters still learn.
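
Below is a minimal sketch of the per-parameter scaling. It assumes each parameter sees a constant gradient (100 for one, 0.01 for the other), so only the effect of S is visible. The update rule shown is the standard RMSprop form: S = beta * S + (1 - beta) * g^2, and step = lr * g / (sqrt(S) + eps). The helper name and hyperparameter values are illustrative.

```python
import math

def rmsprop_displacement(grad, lr=0.01, beta=0.9, eps=1e-8, steps=10):
    """Total parameter change after `steps` RMSprop updates with a constant gradient."""
    s = 0.0  # moving average of squared gradients (S)
    total = 0.0
    for _ in range(steps):
        s = beta * s + (1 - beta) * grad * grad
        total += lr * grad / (math.sqrt(s) + eps)  # large S -> smaller effective step
    return total

big = rmsprop_displacement(100.0)
small = rmsprop_displacement(0.01)
```

The two gradients differ by a factor of 10,000, and so would plain SGD steps. RMSprop nevertheless moves both parameters by nearly the same amount: `big / small` is very close to 1.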

- 💡

Adam with Bias Correction in Early Steps: Input: starting training from scratch with M=0 and V=0. Processing: Adam’s bias correction computes M_hat and V_hat to avoid underestimating the true moments in the first few steps. Output: stable and appropriately scaled updates even at the beginning of training. Key point: bias correction matters because both moment estimates start out biased toward zero, by different amounts. Without it, early update sizes are distorted; with the default betas, the first steps would actually be several times too large rather than too small.
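
The sketch below implements one Adam update for a scalar parameter. The helper name and the test gradient of 0.5 are illustrative. It compares the very first step with and without bias correction, using the defaults beta1 = 0.9, beta2 = 0.999, eps = 1e-8 and a learning rate of 1e-3.

```python
import math

def adam_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8,
              bias_correction=True):
    """One Adam update for a single scalar parameter; t is the 1-based step count."""
    m = beta1 * m + (1 - beta1) * grad          # first moment (mean of gradients)
    v = beta2 * v + (1 - beta2) * grad * grad   # second moment (mean of squared gradients)
    if bias_correction:
        m_hat = m / (1 - beta1 ** t)            # undo the pull toward the zero initialization
        v_hat = v / (1 - beta2 ** t)
    else:
        m_hat, v_hat = m, v
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v

# First step from M = 0, V = 0 with gradient 0.5, starting at theta = 0
corrected, _, _ = adam_step(0.0, 0.5, 0.0, 0.0, t=1)
uncorrected, _, _ = adam_step(0.0, 0.5, 0.0, 0.0, t=1, bias_correction=False)
```

With bias correction, the first step has a size of about lr (`corrected` is roughly -1e-3). Without it, M is scaled down by (1 - beta1) = 0.1 but sqrt(V) only by sqrt(1 - beta2), roughly 0.032. As a result, `uncorrected` comes out roughly 3.16 times the corrected step. The correction restores the intended scale.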

- 💡

Step Decay Schedule: Input: initial learning rate 0.1, with drops by 10x at epochs 30 and 60. Processing: train normally; at epoch 30, the LR goes to 0.01; at epoch 60, to 0.001. Output: sharp improvements in validation accuracy often occur soon after each drop. Key point: staged reductions allow fast early learning and careful fine-tuning later.
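
Here is a minimal step-decay function matching this example: an initial LR of 0.1, with 10x drops at epochs 30 and 60. The function and argument names are illustrative. Frameworks ship schedulers with similar behavior, for example PyTorch's `torch.optim.lr_scheduler.MultiStepLR` with its `milestones` and `gamma` arguments.

```python
def step_decay(epoch, lr0=0.1, factor=0.1, milestones=(30, 60)):
    """Multiply the initial learning rate by `factor` once for every milestone already reached."""
    drops = sum(1 for m in milestones if epoch >= m)
    return lr0 * factor ** drops

schedule = {epoch: step_decay(epoch) for epoch in (0, 29, 30, 59, 60, 89)}
```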

- 💡

Exponential Decay Schedule: Input: initial learning rate 0.01 and decay rate k=0.95 per epoch. Processing: each epoch multiplies LR by 0.95; by epoch 20, LR is approximately 0.0036. Output: training steps become progressively gentler. Key point: smooth decay can stabilize convergence without abrupt changes.
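
The arithmetic in this example can be written as a small function. Here k is the per-epoch multiplier, as in the example; some texts instead write lr0 * exp(-k * epoch) and treat k as a decay rate. The names are illustrative.

```python
def exponential_decay(epoch, lr0=0.01, k=0.95):
    """Multiply the learning rate by k once per epoch: lr = lr0 * k**epoch."""
    return lr0 * k ** epoch

print(round(exponential_decay(20), 4))  # 0.0036
```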

- 💡

Cosine Annealing Schedule: Input: eta_max=0.1, eta_min=0.0001, T_max=100 epochs. Processing: the LR follows a cosine curve from 0.1 down to 0.0001 over 100 epochs. Output: smooth early progress and calm late-stage fine-tuning with improved final accuracy. Key point: the smooth shape of cosine annealing often yields better minima.
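
The following sketch implements the standard closed-form cosine annealing curve, eta_t = eta_min + 0.5 * (eta_max - eta_min) * (1 + cos(pi * t / T_max)), using this example's values as defaults. It assumes t counts epochs and that no warm restarts are used.

```python
import math

def cosine_annealing(t, eta_max=0.1, eta_min=0.0001, t_max=100):
    """Learning rate at epoch t, falling from eta_max (t = 0) to eta_min (t = t_max)."""
    return eta_min + 0.5 * (eta_max - eta_min) * (1 + math.cos(math.pi * t / t_max))

curve = [cosine_annealing(t) for t in range(0, 101, 25)]
```

The curve falls slowly near the start and end and fastest in the middle. At t = 50 the learning rate sits exactly halfway between the two bounds, at 0.05005.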

- 💡

L2 Regularization Effect: Input: a model beginning to overfit (training loss decreasing, validation loss increasing). Processing: add L2 with lambda=1e−4 to the loss; updates now include a small shrinkage proportional to weights. Output: weights become smaller on average, validation loss stabilizes or decreases. Key point: weight decay discourages overly large, unstable weights and improves generalization.
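
Below is a minimal sketch of the L2-regularized SGD update. It assumes the penalty is written (lambda / 2) * sum(w^2), so its gradient is lambda * w; if the penalty were written lambda * sum(w^2), the gradient would be 2 * lambda * w. The value lambda = 1e-4 matches the example. The learning rate of 0.1 and the function name are illustrative assumptions.

```python
def sgd_step_with_l2(weights, data_grads, lr=0.1, lam=1e-4):
    """One SGD step on: data_loss + (lam / 2) * sum(w**2 for w in weights).

    The penalty's gradient is lam * w, so every weight is also pulled toward zero
    in proportion to its own size, which is why L2 is also called weight decay.
    """
    return [w - lr * (g + lam * w) for w, g in zip(weights, data_grads)]

weights = [2.0, -4.0, 0.5]
# With a zero data gradient, the update is pure shrinkage: each weight is scaled by (1 - lr * lam).
shrunk = sgd_step_with_l2(weights, [0.0, 0.0, 0.0])
```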

- 💡

L1 Regularization for Sparsity: Input: a model with many small, noisy weights. Processing: add L1 with lambda tuned on validation; updates push many tiny weights to exactly zero. Output: a sparser model that may be more interpretable and faster. Key point: L1 acts as feature selection by pruning unhelpful connections.
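
Plain (sub)gradient steps on an L1 penalty tend to make small weights hover around zero rather than land on it exactly. A common way to get exact zeros is a proximal step: take the usual gradient step on the data loss, then soft-threshold each weight by lr * lambda. This technique is swapped in here as an assumption; the lecture does not specify it. The names and values below are illustrative.

```python
def soft_threshold(w, threshold):
    """Shrink |w| by threshold, snapping to exactly 0.0 if it would cross zero."""
    if w > threshold:
        return w - threshold
    if w < -threshold:
        return w + threshold
    return 0.0

def sgd_step_with_l1(weights, data_grads, lr=0.1, lam=0.05):
    """Gradient step on the data loss, then soft-threshold by lr * lam (proximal gradient)."""
    return [soft_threshold(w - lr * g, lr * lam) for w, g in zip(weights, data_grads)]

weights = [0.8, -0.003, 0.002, -0.6, 0.004]
sparse = sgd_step_with_l1(weights, [0.0] * len(weights))
```

Every weight shrinks toward zero by the same amount (0.005 here). Weights smaller than that become exactly 0.0, while large weights survive slightly shrunk.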

- 💡

Dropout in a Hidden Layer: Input: a two-hidden-layer MLP with 512 neurons per layer. Processing: apply dropout with p=0.5 during training; for each mini-batch, a random subset of about half the neurons in those layers is turned off. Output: typically better validation performance than without dropout, especially in over-parameterized settings. Key point: dropout prevents co-adaptation and acts like training an ensemble of thinned networks.
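
Below is a minimal sketch of dropout on one hidden layer. It is written as inverted dropout, the variant most frameworks use: survivors are scaled by 1 / (1 - p) during training, so nothing needs to change at test time. The original formulation instead scaled the weights at test time. The function signature and the all-ones activations are illustrative.

```python
import random

def dropout(activations, p, training, rng):
    """Inverted dropout: in training, zero each unit with probability p and scale survivors by 1 / (1 - p)."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)  # inference: no units are dropped
    keep = 1.0 - p
    return [a / keep if rng.random() >= p else 0.0 for a in activations]

rng = random.Random(0)
hidden = [1.0] * 512  # a 512-unit hidden layer, all activations 1.0 for clarity
train_out = dropout(hidden, p=0.5, training=True, rng=rng)
test_out = dropout(hidden, p=0.5, training=False, rng=rng)
```

With p = 0.5, each training pass zeroes roughly half of the 512 units, a different random half each time, and doubles the rest. The inference pass returns the activations unchanged.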

- 💡

Batch Normalization Stabilization: Input: a deep network where gradients are unstable and training is sensitive to learning rate. Processing: insert batch norm after linear layers: normalize activations per mini-batch, then scale/shift with gamma and beta (initialized 1 and 0). Output: smoother loss curve and ability to use a higher learning rate. Key point: batch norm normalizes intermediate signals and makes optimization easier.
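
The following is a minimal training-mode sketch of batch normalization in plain Python, with gamma = 1 and beta = 0 as at initialization. The value eps = 1e-5 and the helper name are assumptions. The sketch covers training only; at inference, frameworks typically normalize with running averages of the mean and variance collected during training rather than the current batch's statistics.

```python
import math

def batch_norm_train(batch, gamma, beta, eps=1e-5):
    """Normalize each feature over the mini-batch, then scale by gamma and shift by beta.

    `batch` is a list of rows (one row per example); gamma and beta hold one value per feature.
    """
    n = len(batch)
    n_features = len(batch[0])
    out = [[0.0] * n_features for _ in range(n)]
    for j in range(n_features):
        column = [row[j] for row in batch]
        mean = sum(column) / n
        var = sum((x - mean) ** 2 for x in column) / n
        for i, x in enumerate(column):
            out[i][j] = gamma[j] * (x - mean) / math.sqrt(var + eps) + beta[j]
    return out

batch = [[1.0, 50.0], [2.0, 60.0], [3.0, 70.0], [4.0, 80.0]]
normed = batch_norm_train(batch, gamma=[1.0, 1.0], beta=[0.0, 0.0])
```

After normalization, each feature has a mean of about 0 and a variance of about 1, regardless of its original scale (1 to 4 versus 50 to 80 here). The learned gamma and beta can then rescale and shift it if that helps.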

- 💡

Monitoring Validation to Detect Overfitting: Input: training and validation loss logs over epochs. Processing: observe training loss continues to drop, but validation loss bottoms out and then rises. Output: decision to increase regularization (e.g., stronger L2, add dropout) or reduce model size. Key point: the divergence of training and validation curves signals overfitting and guides corrective action.
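
The decision described here can be partly automated. The sketch below finds the epoch with the lowest validation loss, which is the checkpoint worth keeping. It flags overfitting when validation loss has not improved for `patience` epochs while training loss kept falling. The function name, the patience rule, and the loss values are all hypothetical, made up for illustration.

```python
def check_overfitting(train_losses, val_losses, patience=3):
    """Return (best_epoch, overfitting_flag) from per-epoch loss logs (0-based epochs).

    best_epoch: epoch with the lowest validation loss, i.e. the checkpoint to keep.
    overfitting_flag: True if validation loss has not improved for at least `patience`
    epochs while training loss kept falling over the same stretch.
    """
    best_epoch = min(range(len(val_losses)), key=lambda i: val_losses[i])
    epochs_since_best = len(val_losses) - 1 - best_epoch
    train_still_falling = train_losses[-1] < train_losses[best_epoch]
    return best_epoch, epochs_since_best >= patience and train_still_falling

# Hypothetical logs, made up for illustration: validation loss bottoms out at epoch 4.
train_losses = [1.00, 0.70, 0.50, 0.40, 0.32, 0.27, 0.23, 0.20]
val_losses = [1.10, 0.80, 0.62, 0.55, 0.53, 0.56, 0.60, 0.66]
print(check_overfitting(train_losses, val_losses))  # (4, True)
```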

05 Conclusion

This lecture provided a practical roadmap for training neural networks effectively and robustly. It began with the core idea of minimizing a loss function using gradients, comparing full gradient descent with mini-batch SGD. It then introduced momentum to speed learning along stable directions, RMSprop to adapt learning rates per parameter based on squared gradients, and Adam to combine the strengths of both with bias correction—often a strong default optimizer. Learning rate schedules—step, exponential, and cosine annealing—were explained as tools to start training fast and finish carefully, with cosine annealing offering a particularly smooth and effective curve.

To combat overfitting, the lecture highlighted regularization methods. L2 (weight decay) keeps weights small and stable, L1 encourages sparsity by zeroing out many weights, and dropout randomly disables neurons during training to reduce co-adaptation and improve generalization. Batch normalization further stabilizes and speeds training by normalizing activations per mini-batch and then learning the best scale and shift, with gamma initialized to 1 and beta to 0.

Finally, a set of practical tips tied everything together: choose an architecture that matches your data and task complexity; preprocess inputs through normalization and outlier handling; initialize weights with methods like Xavier or He to maintain healthy activation variance; pick a reasonable learning rate and pair it with a decay schedule; and continuously monitor both training and validation losses. Visualize weights to spot extremes and use a representative validation set for hyperparameter tuning, ensuring that your model’s improvements hold up on data it hasn’t seen.

The overarching message is that successful training is a combination of the right optimizer, a thoughtful learning rate strategy, appropriate regularization, and stability techniques like batch normalization, all guided by careful monitoring on a validation set. By following these principles, you can train networks that learn quickly, avoid common pitfalls, and most importantly, generalize well to new data.

Key Takeaways

- ✓Start with a clear training loop: preprocess data, initialize weights well, pick a strong optimizer (often Adam), and choose a sensible initial learning rate. Use mini-batches to balance speed and gradient quality. Track both training and validation metrics every epoch. Save the best model based on validation performance.

- ✓Use momentum to smooth and accelerate learning, especially when gradients zig-zag. A momentum coefficient around 0.9 is a common starting point. Watch for overshooting if the learning rate is high. If training oscillates, slightly reduce the learning rate or momentum.

- ✓Adopt RMSprop or Adam when gradients vary widely across parameters. They adapt per-parameter learning rates automatically. Set epsilon to a small positive value (e.g., 1e−8) for stability. If training is unstable, try reducing the base learning rate before changing other settings.

- ✓Adam is a robust default: beta1=0.9, beta2=0.999, epsilon=1e−8 often work well. Begin with a base learning rate around 1e−3 and adjust based on loss curves. If loss plateaus early, try a slightly higher rate or a better schedule. If loss spikes, lower the learning rate.

- ✓Always use a learning rate schedule: step, exponential, or cosine annealing. Cosine annealing provides smooth decay and often better final accuracy. Step decay is simple and effective for staged training. Exponential decay is a good smooth alternative if step timing is unclear.

- ✓Apply L2 regularization (weight decay) to discourage overly large weights, improving stability and generalization. Start with small values like 1e−4 to 1e−5. If overfitting persists, increase L2 or add dropout. Balance regularization to avoid underfitting.

- ✓Use L1 regularization when sparsity and interpretability matter. Tune lambda carefully to avoid removing useful features. Combine with L2 (elastic net) if needed. Evaluate impact on validation accuracy before and after enabling L1.

- ✓Add dropout to reduce co-adaptation in large models. Begin with p≈0.5 in large hidden layers and adjust based on validation results. Remember: apply dropout only during training, not inference. If training slows too much, reduce p or apply dropout to fewer layers.

- ✓Insert batch normalization to stabilize and speed training, especially in deeper nets. Initialize gamma=1 and beta=0. BN often lets you use a higher learning rate. If batch sizes are tiny, monitor BN statistics carefully; consider larger batches if possible.

- ✓Normalize or standardize inputs and handle outliers before training. Neural networks are sensitive to input scale; consistent scaling improves optimization. If features have wildly different ranges, the model may train very slowly or not at all. Proper preprocessing is a must-have step.

- ✓Initialize weights carefully: use He initialization for ReLU-like activations and Xavier for tanh/sigmoid. Avoid zero initialization for weights to prevent symmetry issues. Check early activation distributions to ensure healthy propagation. Adjust initialization if gradients vanish or explode.

- ✓Monitor both training and validation losses throughout. Divergence between them signals overfitting; add regularization or reduce capacity. If both are high, the model may be underfitting; increase capacity or train longer. Use these signals to guide hyperparameter changes.

- ✓Choose architecture size based on data and task complexity. More layers and neurons require more data and regularization. Start with a modest architecture and scale up if underfitting. Avoid oversized models unless you have strong regularization and sufficient data.

- ✓Pick batch size thoughtfully: smaller batches add noise that can help generalization; larger batches are smoother but might require learning rate tweaks. Ensure batch sizes are compatible with batch norm for stable statistics. Watch GPU memory constraints. Adjust batch size alongside learning rate for best results.

- ✓Keep a clean separation of train/validation/test and avoid validation leakage. Tune hyperparameters only on validation performance. Use a test set once at the end to estimate final generalization. Ensure the validation set matches deployment data as closely as possible.

Glossary

Loss function

A loss function produces a single number that measures how wrong the model’s predictions are compared to the true answers. Lower loss means better predictions. It’s the target we try to minimize during training: by adjusting the model’s parameters to reduce this number, we improve the model. Without it, we wouldn’t know how to change the model to get better.

Parameters (theta)

Parameters are the adjustable numbers inside a neural network, like the weights and biases of its connections. They decide how input signals are transformed as they pass through layers. During training, we change these numbers to make the loss smaller. Good parameter values lead to good predictions. A model with too many parameters can overfit without enough regularization.

Gradient descent (GD)

Gradient descent is a method to reduce loss by moving parameters in the direction that lowers it the most. We compute the gradient (slope) of the loss and step opposite to it. The step size is set by the learning rate. Repeating this moves us downhill on the loss landscape. If steps are too big, we can overshoot; too small, and progress is slow.
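
Here is a one-dimensional sketch of these three regimes. It minimizes f(x) = x^2 (gradient 2x) starting from x = 5. The function and the learning rates are illustrative choices.

```python
def gradient_descent(lr, steps=50, x0=5.0):
    """Minimize f(x) = x**2, whose gradient is 2 * x, by stepping opposite to the gradient."""
    x = x0
    for _ in range(steps):
        x -= lr * 2 * x
    return x

good = gradient_descent(0.1)         # each step multiplies x by 0.8: converges toward 0
too_big = gradient_descent(1.1)      # each step multiplies x by -1.2: overshoots and diverges
too_small = gradient_descent(0.001)  # each step multiplies x by 0.998: still far from 0 after 50 steps
```

Each step multiplies x by (1 - 2 * lr). For this function, any learning rate above 1.0 therefore overshoots further on every step and diverges.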

Stochastic Gradient Descent (SGD)

SGD updates parameters using the gradient computed from a small random batch of data rather than the entire dataset. This makes each step faster and adds noise that can help escape bad local minima. It’s the standard way to train large models. The learning rate still controls step size. Batch size impacts gradient noise and stability.

Mini-batch

A mini-batch is a small subset of the training data used to compute an approximate gradient. It balances speed and stability: smaller batches are cheaper per step but noisier, while larger batches give smoother gradients but cost more per step. Mini-batches fit well on GPUs. They also work naturally with batch normalization. The choice of size affects generalization.

Backpropagation

Backpropagation is a fast way to compute gradients of the loss with respect to all parameters. It applies the chain rule from calculus layer by layer, starting from the output and moving backward. It reuses intermediate results from the forward pass to save compute. Without backprop, training deep networks would be too slow. It’s the engine of modern deep learning.
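
Below is a minimal sketch of backpropagation for a tiny network with one tanh hidden unit and a squared-error loss. This is an illustrative setup, not taken from the lecture. The forward pass caches intermediate values, and the backward pass applies the chain rule from the loss back to each parameter. A finite-difference check confirms the result.

```python
import math

def forward(params, x, y):
    """Forward pass for y_hat = w2 * tanh(w1 * x + b1) + b2 with loss 0.5 * (y_hat - y)**2."""
    w1, b1, w2, b2 = params
    a = math.tanh(w1 * x + b1)
    y_hat = w2 * a + b2
    loss = 0.5 * (y_hat - y) ** 2
    return loss, (a, y_hat)  # cache intermediates for the backward pass

def backward(params, x, y, cache):
    """Apply the chain rule from the loss back to each parameter, reusing the cache."""
    _, _, w2, _ = params
    a, y_hat = cache
    d_yhat = y_hat - y        # dL/dy_hat
    d_w2 = d_yhat * a         # y_hat = w2 * a + b2
    d_b2 = d_yhat
    d_a = d_yhat * w2
    d_z = d_a * (1 - a * a)   # d tanh(z)/dz = 1 - tanh(z)**2
    d_w1 = d_z * x            # z = w1 * x + b1
    d_b1 = d_z
    return [d_w1, d_b1, d_w2, d_b2]

def numeric_grad(params, x, y, h=1e-6):
    """Central finite differences, used only to check the backprop result."""
    grads = []
    for i in range(len(params)):
        up = list(params)
        down = list(params)
        up[i] += h
        down[i] -= h
        grads.append((forward(up, x, y)[0] - forward(down, x, y)[0]) / (2 * h))
    return grads

params = [0.5, -0.1, 1.5, 0.2]
x, y = 0.8, 1.0
loss, cache = forward(params, x, y)
analytic = backward(params, x, y, cache)
numeric = numeric_grad(params, x, y)
```

Backprop obtains all four gradients from a single forward and backward pass. The numerical check needs two extra forward passes per parameter, which is why it is used only for testing.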

Learning rate (eta)

The learning rate sets how big a step we take in parameter space at each update. Large learning rates speed things up but can cause instability; small ones are safer but slow. A schedule that shrinks the learning rate over time often works best. Picking eta is one of the most important tuning choices.

Momentum

Momentum adds a velocity term that accumulates past gradients to smooth and speed up learning. If gradients consistently point in a direction, momentum increases step sizes along that path. If they flip, momentum slows the change. This reduces zig-zags and accelerates movement along shallow directions. It is controlled by a coefficient mu.
